Data Science Distinguished Lecture Series

Cornell’s Center for Data Science for Enterprise and Society hosts the Data Science Distinguished Lecture Series, which invites top-tier faculty and industry leaders from around the world who are making groundbreaking contributions in data science. Invitees are selected by an advisory board to the Center. The lectures are a cross-campus event: each seminar is co-hosted with a flagship lecture series at Cornell, working cooperatively with the Bowers College of Computing and Information Science (including the departments of Computer Science, Information Science, and Statistics and Data Science), the Schools of Operations Research & Information Engineering and Electrical & Computer Engineering in the College of Engineering, and the Departments of Mathematics and of Economics in the College of Arts and Sciences. The mission of the Center is to provide a focal point for data science work, both methodological and application domain-driven. The audience for these lectures spans all of Cornell and, given that breadth, the talks are meant to be accessible to a wide range of graduate students and faculty working in data science.

To receive information on news and events sponsored or promoted by the Center for Data Science for Enterprise and Society, join our e-list by emailing datasciencecenter-L-request@cornell.edu with “join” in the subject line. Leave the body blank.

Fall 2025: Data Science Distinguished Lecture Series

LARRY WEIN – Abstract and Bio

ABSTRACT The genealogy process is typically the most time-consuming part of — and a limiting factor in the success of — forensic investigative genetic genealogy, which is a new approach that is transforming the way that law enforcement is solving violent crimes. Given a list of distant relatives of the unknown criminal, which is obtained from two databases (GEDmatch and Family Tree DNA), we construct an algorithm that attempts to find the criminal as quickly as possible. Using simulated versions of 17 cases (eight solved, nine unsolved) from the nonprofit DNA Doe Project, we estimate that our algorithm can solve criminal cases 25 times faster than a benchmark strategy. We also describe our recent use of the algorithm to attempt to solve stalled cold cases with genealogist Barbara Rae-Venter, who solved the Golden State Killer case.

BIO Lawrence M. Wein is the Jeffrey S. Skoll Professor of Management Science at the Graduate School of Business, Stanford University, where he was a Senior Associate Dean of Academic Affairs during 2018-2024. He received a Ph.D. in Operations Research from Stanford University in 1988 and was a professor at MIT’s Sloan School of Management from 1988 to 2002. His research interests are in operations management and public health. He was Editor-in-Chief of Operations Research from 2000 to 2005. He has been awarded a Presidential Young Investigator Award, the Erlang Prize, the Koopman Prize, the INFORMS Expository Writing Award, the Philip McCord Morse Lectureship, the INFORMS President’s Award, the Frederick W. Lanchester Prize, the George E. Kimball Medal, a best paper award from Risk Analysis, and a notable paper award from the Journal of Forensic Sciences. He is an INFORMS Fellow, an M&SOM Fellow, and a member of the National Academy of Engineering.

This event is a University Lecture and Data Science Distinguished Lecture co-sponsored with the Center for Data Science for Enterprise and Society, the SC Johnson College of Business, and the School of Operations Research and Information Engineering.

Spring 2025: Data Science Distinguished Lecture Series

DAVID RAND – Abstract and Bio

ABSTRACT This talk will provide an overview of my research on misinformation, social media, and generative AI. First, I will discuss approaches for reducing the spread of misinformation on social media. This will focus on research showing that misinformation sharing is often due to inattention rather than intentional deception, and therefore that simple accuracy prompts can significantly reduce the spread of low-quality news, as shown in both survey experiments and field experiments with millions of users on Facebook and Twitter. Then I will discuss work on using large language models to correct inaccurate beliefs. I will briefly discuss work showing that brief human-AI dialogues can meaningfully reduce conspiracy beliefs, and then describe a number of other applications of human-AI dialogues to address misconceptions related to topics including race, vaccination, public health policy, and climate health. Together, this work sheds light on why misinformation spreads and potential solutions to combat its pernicious effects.

BIO David Rand is the Erwin H. Schell Professor and Professor of Management Science and Brain and Cognitive Sciences at MIT. As an interdisciplinary computational social scientist, David’s research combines behavioral experiments and online/field studies with mathematical and computational models to understand human decision-making. His work focuses on illuminating why people believe and share misinformation on social media and what to do about it; how human-AI interactions can correct inaccurate beliefs; understanding political psychology and polarization; and promoting human cooperation. He has published over 200 articles in peer-reviewed journals such as Nature, Science, PNAS, the American Economic Review, Psychological Science, Management Science, New England Journal of Medicine, and the American Journal of Political Science, and his work has received widespread media attention. David has frequently advised technology companies such as Google, Meta, and TikTok in their efforts to combat misinformation, and has provided testimony about misinformation to the US and UK governments. He has also written for popular press outlets including the New York Times, Wired, and New Scientist. He was named to Wired magazine’s Smart List 2012 of “50 people who will change the world,” chosen as a 2012 Pop!Tech Science Fellow, awarded the 2015 Arthur Greer Memorial Prize for Outstanding Scholarly Research, chosen as fact-checking researcher of the year in 2017 by the Poynter Institute’s International Fact-Checking Network, awarded the 2020 FABBS Early Career Impact Award from the Society for Judgment and Decision Making, and selected as a 2021 Best 40-Under-40 Business School Professor by Poets & Quants. Papers he has coauthored have been awarded Best Paper of the Year in Experimental Economics, Social Cognition, and Political Methodology.

PANOS TOULIS – Abstract and Bio

Randomization Tests for Robust Causal Inference in Network Experiments

ABSTRACT Network experiments pose unique challenges for causal inference due to interference, where cause-effect relationships are confounded by network interactions among experimental units. This paper focuses on group formation experiments, where individuals are randomly assigned to groups and their responses are observed—for example, do first-year students achieve better grades when randomly paired with academically stronger roommates? We extend classical Fisher Randomization Tests (FRTs) to this setting, resulting in tests that are exact in finite samples and justified solely by the randomization itself. We also establish sufficient theoretical conditions under which general FRTs for network peer effects reduce to computationally efficient permutation tests. Our analysis identifies equivariance as a key algebraic property ensuring the validity of permutation tests under network interference.

BIO Panos Toulis is an Associate Professor of Econometrics and Statistics and a John E. Jeuck Faculty Fellow at the Booth School of Business. His research interests include causal inference, experimental design, and statistical machine learning. His applied work primarily focuses on social networks, including school absenteeism, peer effects, crime spillovers, and taxation policy. He has received a faculty award from Adobe, an Economic Graph Challenge award from LinkedIn, the Tom Ten Have Award in causal inference, the Arthur P. Dempster Award from Harvard University, and the Google U.S./Canada PhD Fellowship in Statistics. His research has also been supported by NSF research grants.

PETER CRAMTON – Abstract and Bio

ABSTRACT We propose a forward electricity market design that enables efficient and granular hedging in the face of growing electrification and intermittent renewables. Market participants can trade thousands of forward contracts and European call options with a high strike price (e.g., $1,000/MWh), each tailored to granular delivery windows spanning up to four years ahead. To implement this market, we apply Budish et al.’s (2023) flow trading framework to electricity markets. It encourages incremental trading and supports liquidity provision via frequent batch auctions. (This is joint work with Simon Brandkamp, Jason Dark, Darrell Hoy, David Malec, Axel Ockenfels, and Chris Wilkens.)

BIO Peter Cramton is Professor of Economics Emeritus at the University of Maryland and International Research Fellow at the Max Planck Institute for Research on Collective Goods. Since 1983, he has conducted research on auction theory and practice. This research appears in leading economics journals. The focus is the design of auction-based markets. Applications include communications, electricity, and financial markets. He is a co-inventor of the spectrum auction design used in Canada, Australia, and many European countries to auction spectrum. Since 2001, he has played a lead role in the design and implementation of electricity and gas auctions in North America, South America, and Europe. He has advised on the design of carbon auctions in Europe, Australia, and the United States, including conducting the world’s first greenhouse-gas auction held in the UK in 2002.

Zoom link for talk

Passcode: 785058

Meeting ID: 922 5712 1736

YUEJIE CHI – Abstract and Bio

Federated Reinforcement Learning: Statistical, Communication and Computation Trade-offs

Abstract: Reinforcement learning (RL), concerning decision making in uncertain environments, lies at the heart of modern artificial intelligence. Due to the high dimensionality, training of RL agents typically requires a significant amount of computation and data to achieve desirable performance. However, data collection can be extremely time-consuming with limited access in real-world applications, especially when performed by a single agent. On the other hand, it is plausible to leverage multiple agents to collect data simultaneously, under the premise that they can learn a global policy collaboratively without the need of sharing local data in a federated manner. This talk addresses the fundamental statistical, communication and computation trade-offs in the algorithmic designs of federated RL algorithms, covering both blessings and curses in the presence of data and task heterogeneities across the agents.

Bio: Dr. Yuejie Chi is the Sense of Wonder Group Endowed Professor of Electrical and Computer Engineering in AI Systems at Carnegie Mellon University, with courtesy appointments in the Machine Learning department and CyLab. She received her Ph.D. and M.A. from Princeton University, and B. Eng. (Hon.) from Tsinghua University, all in Electrical Engineering. Her research interests lie in the theoretical and algorithmic foundations of data science, signal processing, machine learning and inverse problems, with applications in sensing, imaging, decision making, and AI systems. Among others, Dr. Chi received the Presidential Early Career Award for Scientists and Engineers (PECASE), SIAM Activity Group on Imaging Science Best Paper Prize, IEEE Signal Processing Society Young Author Best Paper Award, and the inaugural IEEE Signal Processing Society Early Career Technical Achievement Award for contributions to high-dimensional structured signal processing. She is an IEEE Fellow (Class of 2023) for contributions to statistical signal processing with low-dimensional structures.

DEANNA NEEDELL – Abstract and Bio

ABSTRACT In this talk, we will address areas of recent work centered around the themes of fairness and foundations in machine learning as well as highlight the challenges in this area. We will discuss recent results involving linear algebraic tools for learning, such as methods in non-negative matrix factorization that include tailored approaches for fairness. We will showcase our approach as well as practical applications of those methods. Then, we will discuss new foundational results that theoretically justify phenomena like benign overfitting in neural networks. Throughout the talk, we will include example applications from collaborations with community partners, using machine learning to help organizations with fairness and justice goals.

BIO Deanna Needell earned her PhD from UC Davis before working as a postdoctoral fellow at Stanford University. She is currently a full professor of mathematics at UCLA, the Dunn Family Endowed Chair in Data Theory, and the Executive Director for UCLA’s Institute for Digital Research and Education. She has earned many awards, including the Alfred P. Sloan fellowship, an NSF CAREER award and other awards, and the IMA prize in Applied Mathematics. She is a 2022 American Mathematical Society (AMS) Fellow and a 2024 Society for Industrial and Applied Mathematics (SIAM) Fellow. She has been a research professor fellow at several top research institutes including SLMath (formerly MSRI) and the Simons Institute in Berkeley. She also serves as associate editor for several journals including Linear Algebra and its Applications and the SIAM Journal on Imaging Sciences, as well as on the organizing committee for SIAM sessions and the Association for Women in Mathematics.

CYNTHIA DWORK – Talk Details

MESSENGER LECTURE SERIES

Fall 2024: Data Science Distinguished Lecture Series

Friday, December 6

Johan Ugander, Stanford University

Management Science & Engineering

3:45PM – 4:45PM

Rhodes Hall 655

This lecture is part of the CAM Colloquium and co-hosted with the School of Operations Research and Information Engineering

Talk recording not available.

Bridging-Based Fact-Checking Moderates the Diffusion of False Information on Social Media

Social networks scaffold the diffusion of information on social media. Much attention has been given to the spread of true vs. false content on social media, including the structural differences between their diffusion patterns. However, much less is known about how platform interventions on false content alter the diffusion of such content. In this work, we estimate the causal effects of a novel fact-checking feature, Community Notes, adopted by Twitter (now X) to solicit and vet crowd-sourced fact-checking notes for false content. An important aspect of this feature is its use of a bridging-based decision algorithm whereby fact-checking notes are shown only if they are seen as broadly informative and helpful by users from across the political spectrum. To estimate the causal effect of bridging-based fact-checking, we gather detailed time series data for 40,000 posts for which notes have been proposed and use synthetic control methods to produce counterfactual estimates of a range of diffusion-based outcomes. We find that attaching fact-checking notes significantly reduced the reach of and engagement with false content. In reducing reach, we observe that diffusion trees for fact-checked content are less deep, but not less broad, than synthetic control estimates for non-fact-checked content with similar reach. This finding contrasts notably with differences between false vs. true content, where false information diffuses farther, but with structural patterns that are otherwise indistinguishable from those of true information, conditional on reach.

Bio: Johan Ugander is an Associate Professor at Stanford University in the Department of Management Science & Engineering, within the School of Engineering. His research develops algorithmic and statistical frameworks for analyzing social networks, social systems, and other large-scale social and behavioral data. Prior to joining the Stanford faculty he was a postdoctoral researcher at Microsoft Research Redmond 2014-2015 and held an affiliation with the Facebook Data Science team 2010-2014. He obtained his Ph.D. in Applied Mathematics from Cornell University in 2014. His awards include an NSF CAREER Award, a Young Investigator Award from the Army Research Office (ARO), several Best Paper Awards, and the 2016 Eugene L. Grant Undergraduate Teaching Award from the Department of Management Science & Engineering.

Friday, November 21

Adam Tauman Kalai, OpenAI

Research Scientist

11:45 AM

Gates Hall, G01

Recording of Adam’s talk (net id required)

This talk is co-hosted with the CS Colloquium

First-Person Fairness in Chatbots

Much research on fairness has focused on institutional decision-making tasks, such as resume screening. Meanwhile, hundreds of millions of people use chatbots like ChatGPT for very different purposes, ranging from resume writing and technical support to entertainment. We study “first-person fairness,” which means fairness toward the user who is interacting with a chatbot. This includes providing high-quality responses to all users regardless of their identity or background, and avoiding harmful stereotypes. We propose a scalable, privacy-preserving method for evaluating one aspect of first-person fairness across a large, heterogeneous corpus of real-world chatbot interactions. Specifically, we assess potential bias linked to users’ names—which can serve as proxies for demographic attributes like gender or race—in chatbot systems like ChatGPT that can store and utilize user names. Our method leverages a second language model to privately analyze name-sensitivity in the chatbot’s responses. We verify the validity of these annotations through independent human evaluation. In addition to quantitative bias measurements, our approach also identifies common tasks, such as “career advice” or “writing a story” and gives succinct descriptions of subtle response differences across tasks. Finally, we publish the system prompts necessary for others to conduct similar experiments that faithfully simulate ChatGPT conversations with arbitrary user profiles.

This is joint work with Tyna Eloundou, Alex Beutel, David G. Robinson, Keren Gu-Lemberg, Anna-Luisa Brakman, Pamela Mishkin, Meghan Shah, Johannes Heidecke, and Lilian Weng.

Bio: Adam Tauman Kalai is a Research Scientist at OpenAI working on AI Safety and Ethics. He has worked in Algorithms, Fairness, Machine Learning Theory, Game Theory, and Crowdsourcing. He received his PhD from Carnegie Mellon University. He has served as an Assistant Professor at Georgia Tech and the Toyota Technological Institute at Chicago. He is a member of the science team of Project CETI, an interdisciplinary initiative to understand the communication of sperm whales. He has co-chaired AI and crowdsourcing conferences including COLT (the Conference on Learning Theory), HCOMP (the Conference on Human Computation) and NEML. His honors include the Majulook prize, best paper awards, an NSF CAREER award, and an Alfred P. Sloan fellowship.

Friday, November 15

Mengdi Wang, Princeton University

Center for Statistics and Machine Learning, Department of Electrical Engineering

3:45 – 4:45PM

Frank H.T. Rhodes Hall, 655

This talk is co-hosted with the CAM Colloquium and ORIE Colloquium

Capitalizing on Generative AI: Guided Diffusion Models Towards Generative Optimization

Diffusion models represent a significant breakthrough in generative AI, operating by progressively transforming random noise distributions into structured outputs, with adaptability for specific tasks through training-free guidance or self-play fine-tuning. In this presentation, we delve into the statistical and optimization aspects of diffusion models and establish their connection to theoretical optimization frameworks. We explore how unconditioned diffusion models efficiently capture complex high-dimensional data, particularly when low-dimensional structures are present. Further, we leverage our understanding of diffusion models to introduce a pioneering optimization method termed “generative optimization.” Here, we harness diffusion models as data-driven solution generators to maximize any user-specified reward function. We introduce gradient-based guidance to guide the sampling process of a diffusion model while preserving the learnt low-dimensional data structure. We show that adapting a pre-trained diffusion model with guidance is essentially equivalent to solving a regularized optimization problem. Additionally, we propose to find global optimizers through iterative self-play and fine-tuning the score network using new samples together with gradient guidance. This process mimics a first-order optimization iteration, for which we establish convergence to the global optimum while maintaining intrinsic structures within the training data. Finally, we demonstrate the applications of these methods in life science, engineering designs, and video generation.

Bio: Mengdi Wang is Co-Director of Princeton AI for Accelerated Invention, and associate professor at the Department of Electrical and Computer Engineering and the Center for Statistics and Machine Learning at Princeton University. She is also affiliated with the Department of Computer Science, Omenn-Darling Bioengineering Institute, and Princeton Language+Intelligence. She was a visiting research scientist at Google DeepMind, IAS and Simons Institute on Theoretical Computer Science. Her research focuses on machine learning, reinforcement learning, generative AI, large language models, and AI for science.

Mengdi received her PhD in Electrical Engineering and Computer Science from Massachusetts Institute of Technology in 2013, where she was affiliated with the Laboratory for Information and Decision Systems and advised by Dimitri P. Bertsekas. Before that, she received her bachelor’s degree from the Department of Automation, Tsinghua University. Mengdi received the Young Researcher Prize in Continuous Optimization of the Mathematical Optimization Society in 2016 (awarded once every three years), the Princeton SEAS Innovation Award in 2016, the NSF CAREER Award in 2017, the Google Faculty Award in 2017, the MIT Tech Review 35-Under-35 Innovation Award (China region) in 2018, the WAIC YunFan Award in 2022, and the American Automatic Control Council’s Donald Eckman Award in 2024 for “extraordinary contributions to the intersection of control, dynamical systems, machine learning and information theory”. She serves as a Program Chair for ICLR 2023, as Senior AC for NeurIPS, ICML, and COLT, and as associate editor for Harvard Data Science Review and Operations Research. Research supported by NSF, AFOSR, NIH, ONR, Google, Microsoft C3.ai, FinUP, RVAC Medicines, MURI, GenMab.

Monday – Wednesday, November 11 – 13

Sendhil Mullainathan

Sendhil Mullainathan, the Peter de Florez Professor in Electrical Engineering and Computer Science and in Economics at MIT, will be on campus as part of the Dean of Faculty Messenger Lecture series and the Data Science Distinguished Lecture series for the Center for Data Science for Enterprise and Society. Professor Mullainathan will give three talks, Monday, Nov. 11 through Wednesday, Nov. 13.

MESSENGER LECTURES:

Monday, November 11

PUBLIC LECTURE: Tools of Thought: Building Algorithms that Enhance Human Capacity

Time: 3:45 pm – 4:45 pm

Location: Baker Lab, 200 (259 East Ave)

Reception following in the Baker Portico

The biggest proponents for AI lack no confidence. We are close, they argue, to being able to build algorithms that do everything humans can. The problem with this vision is not hubris; quite the opposite. I will argue that it lacks ambition. Computing will have the biggest (positive) impact on society not from algorithms doing what we already do more cheaply or in silico, but from doing things that humans cannot even dream of. In effect, we want thought partners, not substitutes. Building such algorithms requires that we integrate our knowledge of people with our knowledge of computing. I will illustrate with several examples.

Tuesday, November 12

Incorporating Behavioral Science into Computational Science

Co-sponsored with the CS Colloquium and the ORIE Seminar

Time: 11:45 am – 12:45 pm

Location: Gates Hall, G01

Reception prior to talk

I will describe several projects that incorporate our understanding of humans into how we build and evaluate algorithmic systems, such as supervised learners or large language models.

Wednesday, November 13

Incorporating Algorithms into Economics and Policy

Co-sponsored with the Law, Economics, and Policy Seminar

Time: 1:30 pm – 2:45 pm

Location: ILR Conference Center, Room 423

I will describe how algorithms can have an outsized impact on public policy specifically, and on economics more broadly.

BIO: Professor Mullainathan is a recipient of the MacArthur “Genius Grant,” has been designated a “Young Global Leader” by the World Economic Forum, was labeled a “Top 100 Thinker” by Foreign Policy Magazine, and was named to the “Smart List: 50 people who will change the world” by Wired Magazine (UK). He recently co-authored the book Scarcity: Why Having too Little Means so Much and writes regularly for the New York Times. Professor Mullainathan helped co-found a non-profit to apply behavioral science (ideas42), co-founded a center to promote the use of randomized control trials in development (the Abdul Latif Jameel Poverty Action Lab), serves on the board of the MacArthur Foundation, has worked in government in various roles, is affiliated with the NBER and BREAD, and is a member of the American Academy of Arts and Sciences. His current research uses machine learning to understand complex problems in human behavior, social policy, and especially medicine, where computational techniques have the potential to uncover biomedical insights from large-scale health data.

All talks will be available on Zoom:

https://cornell.zoom.us/j/92499118564?pwd=BgykAkyzsO5LkaWnD3HaTuH3aUGnhr.1&from=addon#success

ZOOM Meeting ID: 924 9911 8564

Passcode: 388714

Friday, September 20

Tom Magnanti

Ray Fulkerson: Awe-inspiring Pioneer of Network Flows, Optimization, and Combinatorial Analysis

Part of the D.R. Fulkerson Centennial Celebration hosted by the School of Operations Research and Information Engineering

Abstract: Very few individuals, like Delbert Ray Fulkerson, have defined a field. Ray was a mathematician who pioneered research in theory and applications. This presentation will summarize for a general audience some of his fundamental contributions to flows on networks, linear and integer programming, and combinatorial analysis. With Lester Ford, he developed network flow theory, and with George Dantzig and others he developed methods for solving linear and integer programming, including such topics as primal-dual methods, cutting planes, and column generation for solving large scale optimization problems. He made signature contributions to extreme combinatorics, with novel and elegant results on pairs of polyhedra and perfect graphs. He contributed to applications in project scheduling, tanker scheduling, railway planning, logistics, transportation planning, and the traveling salesman problem. He was a masterful individual with superb insight, ingenuity, creativity, and elegance.

Bio: Dr. Magnanti has spent his entire academic career at MIT, where he is an Institute Professor and was formerly Dean of the School of Engineering and head of the Management Science area of the MIT Sloan School of Management, among many other leadership roles. Much of his professional career has been devoted to education that combines engineering and management and to teaching and research in applied and theoretical aspects of large-scale optimization in areas such as production planning and scheduling, transportation planning, facility location, networks, logistics, and communication systems design. He is an inaugural Fellow and past president of INFORMS, past president of ORSA, and a member of the National Academy of Engineering and the American Academy of Arts and Sciences. His awards include the Lanchester Prize (1993) and the George E. Kimball Medal (1994) and awards at MIT for educational innovations and distinguished service. He has served on the advisory boards and board of directors for several leading universities and corporations.

Spring 2024: Data Science Distinguished Lecture Series

Wednesday, April 10

Bodhi Sen, Columbia

Extending the Scope of Nonparametric Empirical Bayes

Co-sponsored with the Department of Statistics and Data Science

Abstract: In this talk we will describe two applications of empirical Bayes (EB) methodology. EB procedures estimate the prior probability distribution in a latent variable model or Bayesian model from the data. In the first part we study the (Gaussian) signal plus noise model with multivariate, heteroscedastic errors. This model arises in many large-scale denoising problems (e.g., in astronomy). We consider the nonparametric maximum likelihood estimator (NPMLE) in this setting. We study the characterization, uniqueness, and computation of the NPMLE which estimates the unknown (arbitrary) prior by solving an infinite-dimensional convex optimization problem. The EB posterior means based on the NPMLE have low regret, meaning they closely target the oracle posterior means one would compute with the true prior in hand. We demonstrate the adaptive and near-optimal properties of the NPMLE for density estimation, denoising and deconvolution.

In the second half of the talk, we consider the problem of Bayesian high dimensional regression where the regression coefficients are drawn i.i.d. from an unknown prior. To estimate this prior distribution, we propose and study a “variational empirical Bayes” approach — it combines EB inference with a variational approximation (VA). The idea is to approximate the intractable marginal log-likelihood of the response vector — also known as the “evidence” — by the evidence lower bound (ELBO) obtained from a naive mean field (NMF) approximation. We then maximize this lower bound over a suitable class of prior distributions in a computationally feasible way. We show that the marginal log-likelihood function can be (uniformly) approximated by its mean field counterpart. More importantly, under suitable conditions, we establish that this strategy leads to consistent approximation of the true posterior and provides asymptotically valid posterior inference for the regression coefficients.

Bio: Bodhi Sen is a Professor of Statistics at Columbia University, New York. He completed his Ph.D. in Statistics at the University of Michigan, Ann Arbor, in 2008. Prior to that, he was a student at the Indian Statistical Institute, Kolkata, where he received his Bachelor’s (2002) and Master’s (2004) degrees in Statistics. His core statistical research centers on nonparametrics, including function estimation (with special emphasis on shape-constrained estimation), the theory of optimal transport and its applications to statistics, empirical Bayes procedures, kernel methods, and likelihood- and bootstrap-based inference. He is also actively involved in interdisciplinary research, especially in astronomy.

His honors include the NSF CAREER Award (2012) and the Young Statistical Scientist Award (YSSA) in the Theory and Methods category from the International Indian Statistical Association (IISA). He is an elected Fellow of the Institute of Mathematical Statistics (IMS).

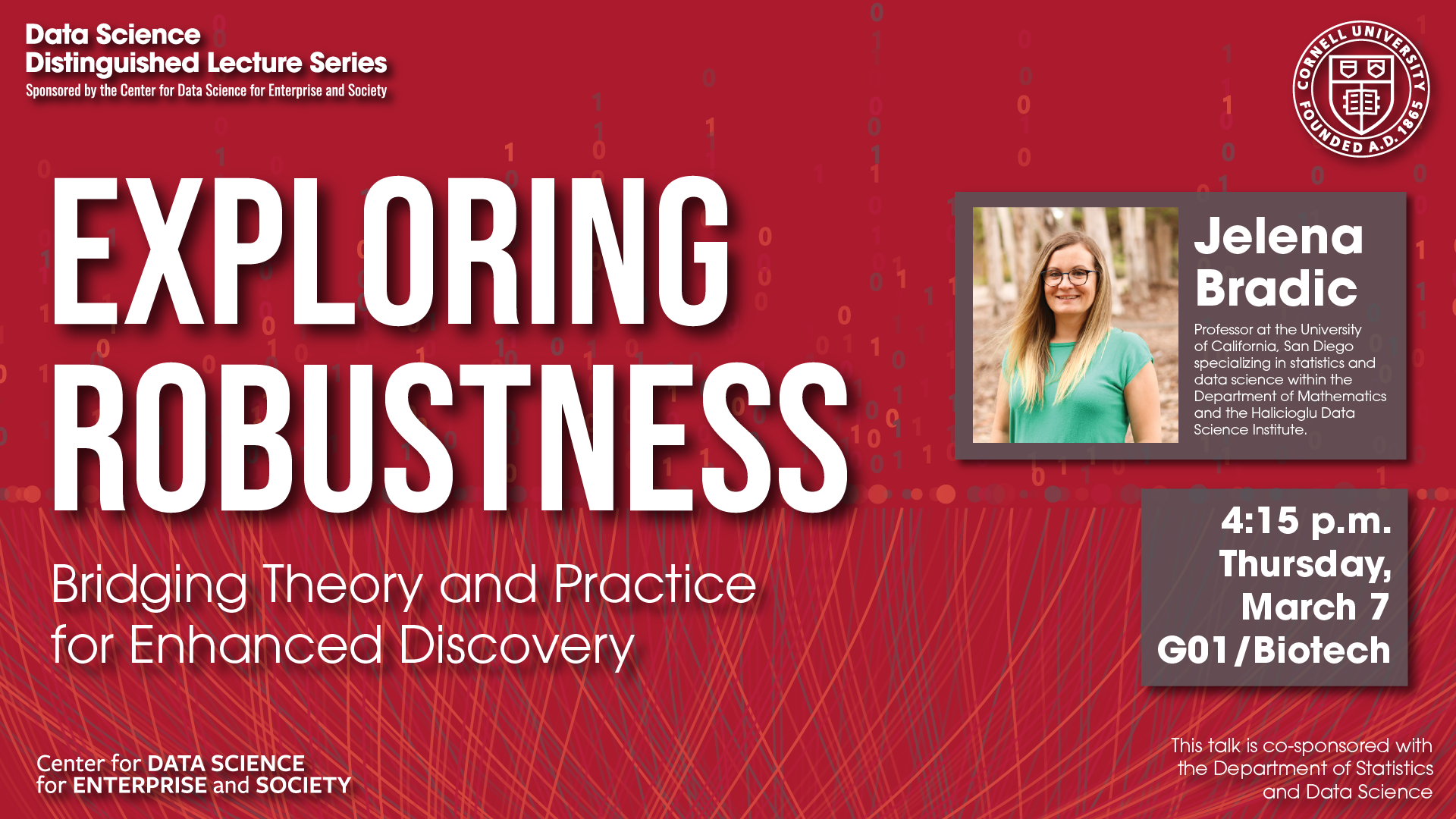

Thursday, March 7

Jelena Bradic, UC San Diego

Exploring Robustness: Bridging Theory and Practice for Enhanced Discovery

Co-sponsored with the Department of Statistics and Data Science

ABSTRACT This talk seeks to redefine the boundaries of statistical robustness. For too long, the field has languished in the shadows of contamination models, adversarial constructs, and outlier management, approaches that, while foundational, scarcely scratch the surface of the potential that model misspecification offers. Our research reveals a fundamental link between robustness and causality, initiating an innovative era in data science. This era is defined by how causality enhances robustness, and in turn, how effectively applied robustness opens up unprecedented opportunities for scientific exploration.

BIO Jelena Bradic is a Professor at the University of California, San Diego, where she specializes in statistics and data science within the Department of Mathematics and the Halicioglu Data Science Institute. Her research focuses on developing robust statistical methods that are resistant to model misspecification, with particular emphasis on high-dimensional data analysis, causal inference, and machine learning applications. Bradic holds a Ph.D. from Princeton University and has made significant contributions to the field of statistics. She is the co-Editor-in-Chief of the ACM/IMS Journal of Data Science, the first interdisciplinary journal between ACM and IMS, and the recipient of several prestigious awards, including the Wijsman Lecture (2023), a Discussion Paper in the Journal of the American Statistical Association (2020), and a Hellman Fellowship, recognizing her as a leading figure in statistical science.

Monday, March 4

Suvrit Sra, MIT

AI and Optimization Through a Geometric Lens

Co-sponsored with the School of Operations Research and Information Engineering

Abstract: Geometry arises in myriad ways across the sciences, and quite naturally also within AI and optimization. In this talk I wish to share with you examples where geometry helps us understand problems in machine learning, optimization, and sampling. For instance, when sampling from densities supported on a manifold, understanding geometry and the impact of curvature is crucial; surprisingly, progress on geometric sampling theory helps us understand certain generalization properties of SGD for deep learning! Another fascinating viewpoint afforded by geometry is in non-convex optimization: geometry can help us make training algorithms more practical (e.g., in deep learning), it can reveal tractability despite non-convexity (e.g., via geodesically convex optimization), or it can simply help us understand important ideas better (e.g., eigenvectors, LLM training, etc.).

Ultimately, my hope is to offer the audience insights into geometric thinking, and to share with them some new tools that can help us make progress on modeling, algorithms, and applications. To make my discussion concrete, I will recall a few foundational results arising from our research, provide several examples, and note some open problems.

Bio: Suvrit Sra is an Alexander von Humboldt Professor of AI at the Technical University of Munich (Germany), and an Associate Professor of EECS at MIT (USA), where he is also a member of the Laboratory for Information and Decision Systems (LIDS) and of the Institute for Data, Systems, and Society (IDSS). He obtained his PhD in Computer Science from the University of Texas at Austin. Before TUM & MIT, he was a Senior Research Scientist at the Max Planck Institute for Intelligent Systems, Tübingen, Germany. He has held visiting positions at UC Berkeley (EECS) and Carnegie Mellon University (Machine Learning Department) during 2013-2014. His research bridges mathematical topics such as differential geometry, matrix analysis, convex analysis, probability theory, and optimization with AI. He founded the OPT (Optimization for Machine Learning) series of workshops, held from OPT2008–2017 at the NeurIPS conference. He has co-edited a book with the same name (MIT Press, 2011). He is also a co-founder and Chief Scientist of Pendulum, a global “Decision Aware AI” startup.

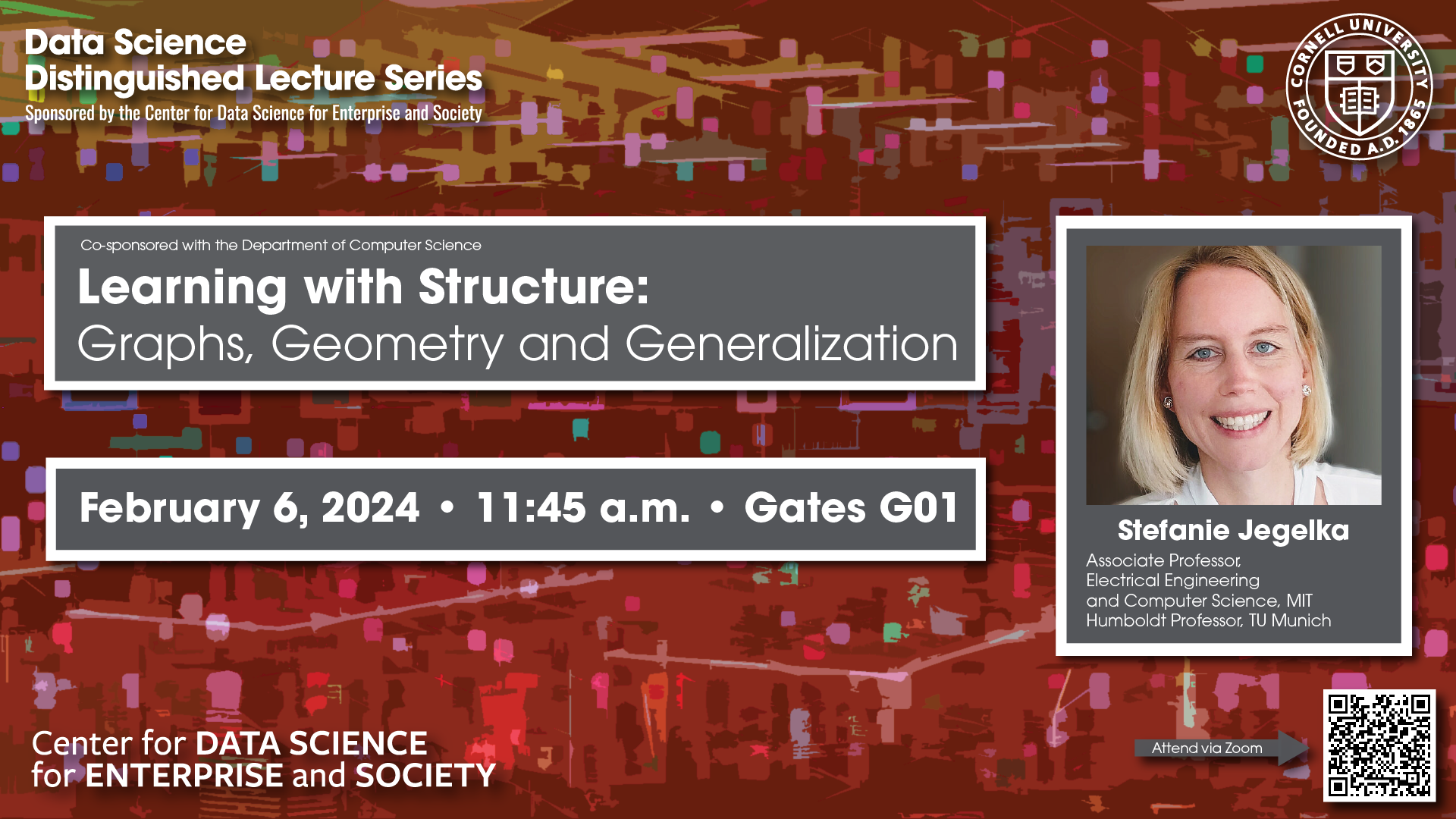

Tuesday, February 6

Stefanie Jegelka, MIT

Learning with Structure, Graphs, Geometry and Generalization

Co-sponsored with the Department of Computer Science

ABSTRACT One grand goal of machine learning is to design widely applicable and resource-efficient learning models that are robust, even under commonly occurring distribution shifts in the input data. A promising step towards this goal is to understand and exploit “structure” in the input data, latent space, model architecture and output. In this talk, I will illustrate examples of exploiting different types of structure in two main areas: representation learning on graphs and learning with symmetries.

First, graph representation learning has found numerous applications, including drug and materials design, traffic and weather forecasting, recommender systems and chip design. In many of these applications, it is important to understand (and improve) the robustness of relevant deep learning models. Here, we will focus on approaches to estimate and understand out-of-distribution predictions on graphs, e.g., training on small graphs and testing on large graphs with different degrees.

Second, in many applications, e.g. in chemistry, physics, biology or robotics, the data have important symmetries. Modeling such symmetries can help data efficiency and robustness of a model. Here, we will see an example of such a modeling task — neural networks on eigenvectors — and its benefits. Moreover, a formal, general analysis quantifies how symmetries improve data efficiency.

BIO Stefanie Jegelka is a Humboldt Professor at TU Munich and an Associate Professor in the Department of EECS at MIT. Before joining MIT, she was a postdoctoral researcher at UC Berkeley, and obtained her PhD from ETH Zurich and the Max Planck Institute for Intelligent Systems. Stefanie has received a Sloan Research Fellowship, an NSF CAREER Award, a DARPA Young Faculty Award, the German Pattern Recognition Award, a Best Paper Award at ICML and an invitation as sectional lecturer at the International Congress of Mathematicians. She has co-organized multiple workshops on (discrete) optimization in machine learning and graph representation learning, and has served as an Action Editor at JMLR and a program chair of ICML 2022. Her research interests span the theory and practice of algorithmic machine learning, in particular, learning problems that involve combinatorial, algebraic or geometric structure.

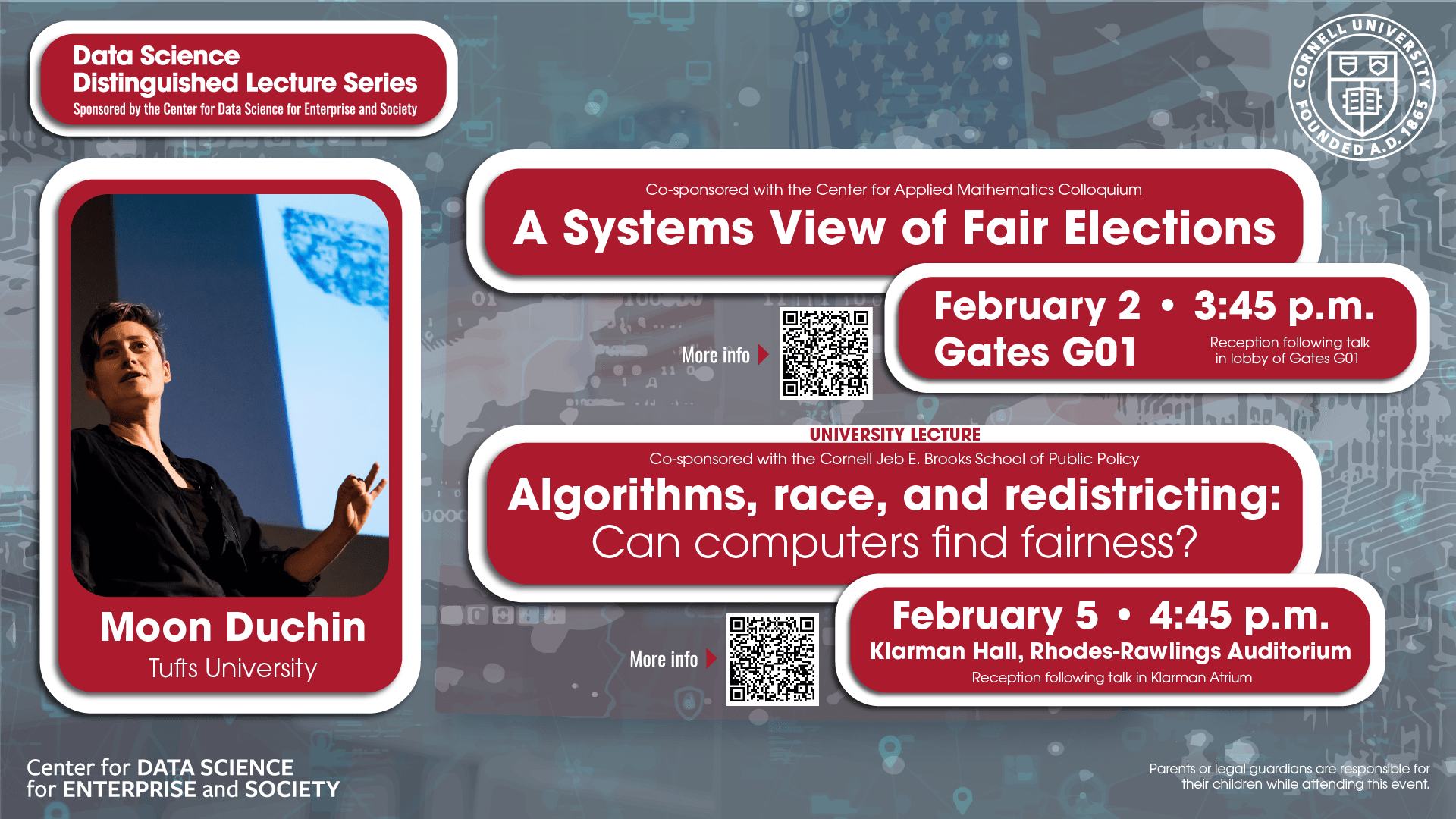

Monday, February 5

Moon Duchin, Tufts University

UNIVERSITY LECTURE

Algorithms, Race, and Redistricting: Can Computers Find Fairness?

This is a University Lecture and is co-sponsored with the Center for Data Science for Enterprise and Society and the Jeb E. Brooks School of Public Policy

Today’s Supreme Court is unmistakably inclined to reject the use of race-conscious measures in law and policy — as Chief Justice Roberts memorably put it, “The way to stop discrimination on the basis of race is to stop discriminating on the basis of race.” The 2023 term saw high-profile challenges to the use of race data in college admissions and in political redistricting. On the gerrymandering front, the state of Alabama asked the court to adopt a novel standard using algorithms to certify race-neutrality, on the principle that computers don’t know what you don’t tell them. But do blind approaches find fairness? In this talk, Professor Duchin will review the very interesting developments of the last few decades — and the last few months! — on algorithms, race, and redistricting.

BIO Moon Duchin is a Professor of Mathematics and a Senior Fellow in the Tisch College of Civic Life at Tufts University. Her pure mathematical work is in geometry, topology, groups, and dynamics, while her data science work includes collaborations in civil rights, political science, law, geography, and public policy on large-scale projects in elections and redistricting. She has recently served as an expert in redistricting litigation in Wisconsin, North Carolina, Alabama, South Carolina, Pennsylvania, Texas, and Georgia. Her work has been recognized with an NSF CAREER grant, a Guggenheim Fellowship, and a Radcliffe Fellowship, and she is a Fellow of the American Mathematical Society.

Friday, February 2

Moon Duchin, Tufts University

A Systems View of Fair Elections

This talk is part of the CAM Colloquium and Cornell’s Center for Data Science for Enterprise and Society’s Data Science Distinguished Lecture Series

ABSTRACT: The mathematical attention to voting systems has come in waves: the first wave was bound up with the development of probability, the second wave was axiomatic, and the third wave is computational. There are many beautiful results giving guarantees and obstructions when it comes to the provable properties of systems of election. But the axioms and objectives from this body of work are not a great match for the practical challenges of 21st century democracy. I will discuss ideas for bringing the tools of modeling and computation into closer conversation with the concerns of policymakers and reformers in the voting rights sphere. In particular, I’ll take a close look at ranked choice voting (or, as Politico recently called it, “the hottest political reform of the moment”).

Fall 2023: Data Science Distinguished Lecture Series

Monday, October 23

Bin Yu, UC Berkeley

Veridical Data Science Toward Trustworthy AI

Co-sponsored with the Department of Statistics and Data Science and the CS Theory Seminar

“AI is like nuclear energy–both promising and dangerous.”

Bill Gates, 2019

Data Science is central to AI and has driven most of the recent advances in biomedicine and beyond. Human judgment calls are ubiquitous at every step of a data science life cycle (DSLC): problem formulation, data cleaning, EDA, modeling, and reporting. Such judgment calls are often responsible for the “dangers” of AI by creating a universe of hidden uncertainties well beyond sample-to-sample uncertainty.

To mitigate these dangers, veridical (truthful) data science is introduced based on three principles: Predictability, Computability and Stability (PCS). The PCS framework and documentation unify, streamline, and expand on the ideas and best practices of statistics and machine learning. In every step of a DSLC, PCS emphasizes reality checks through predictability, considers computability up front, and takes into account expanded sources of uncertainty, including those from data curation/cleaning and algorithm choice, to build more trust in data results. PCS will be showcased through collaborative research in finding genetic drivers of a heart disease, stress-testing a clinical decision rule, and identifying a microbiome-related metabolite signature for possible early cancer detection.

BIO Bin Yu is Chancellor’s Distinguished Professor and Class of 1936 Second Chair in Statistics, EECS, and Computational Biology at UC Berkeley. Her recent research focuses on statistical machine learning practice, algorithms, and theory; veridical data science for trustworthy AI; and interdisciplinary data problems in neuroscience, genomics, and precision medicine. She is a member of the U.S. National Academy of Sciences and the American Academy of Arts and Sciences. She was a Guggenheim Fellow, Tukey Memorial Lecturer of the Bernoulli Society, and Rietz Lecturer of the Institute of Mathematical Statistics (IMS), and won the E. L. Scott Award given by the Committee of Presidents of Statistical Societies (COPSS). She delivered the IMS Wald Lectures and the COPSS Distinguished Achievement Award and Lecture (DAAL) (formerly Fisher) at the Joint Statistical Meetings (JSM) in August 2023. She holds an Honorary Doctorate from the University of Lausanne. She served on the inaugural scientific advisory board of the UK Turing Institute of Data Science and AI, and is serving on the editorial board of PNAS and as a senior advisor at the Simons Institute for the Theory of Computing at UC Berkeley.

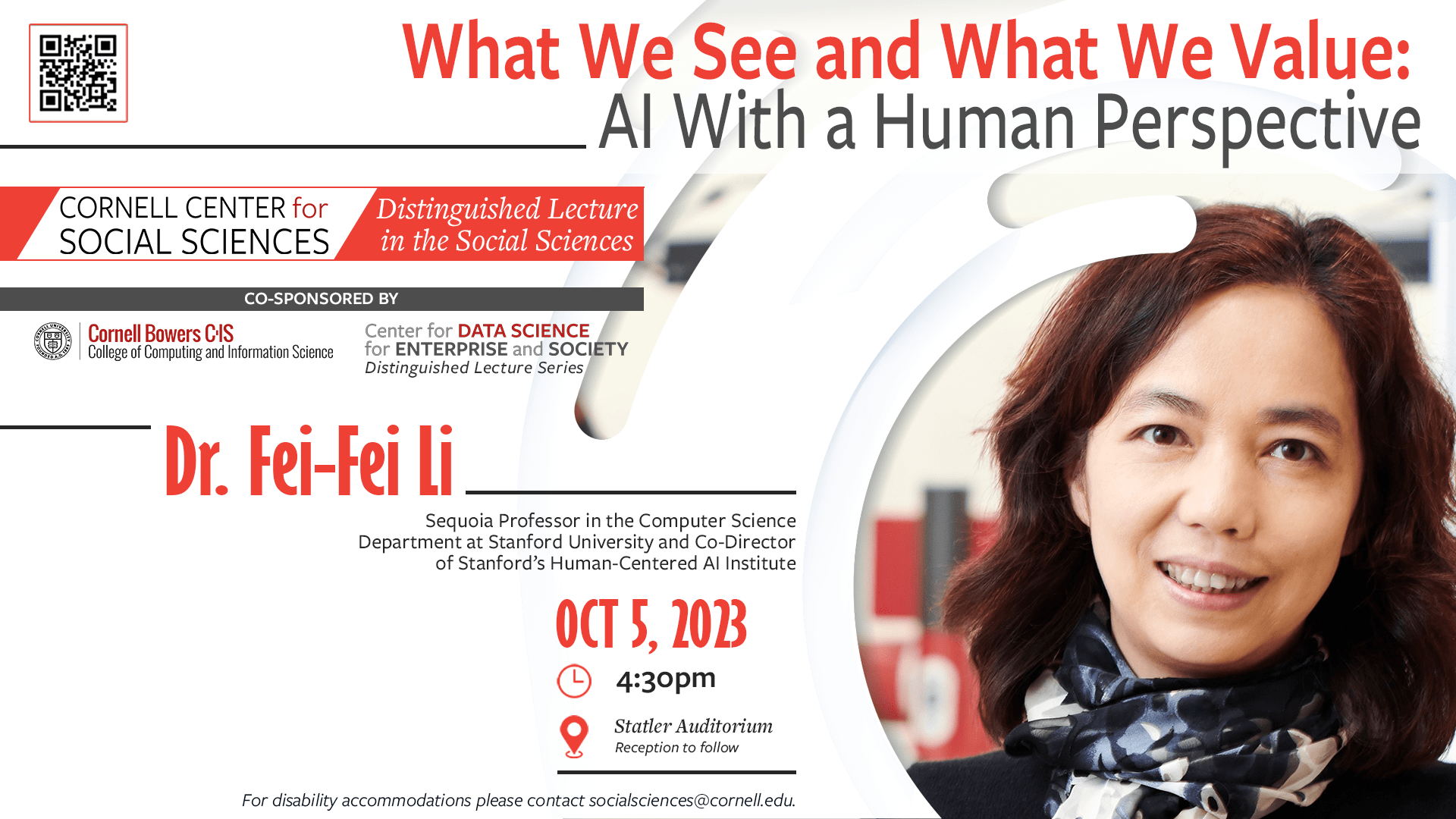

Thursday and Friday, October 5-6, 2023

Fei-Fei Li, Stanford University

What We See and What We Value: AI With a Human Perspective

ABSTRACT In this talk, Dr. Li will present her research with students and collaborators to develop intelligent visual machines using machine learning and deep learning methods. The talk will focus on how neuroscience and cognitive science inspired the development of algorithms that enabled computers to see what humans see and how we can develop computer algorithms and applications to allow computers to see what humans don’t see. Dr. Li will also discuss social and ethical considerations about what we do not want to see or do not want to be seen, and corresponding work on privacy computing in computer vision, as well as the importance of addressing data bias in vision algorithms. She will conclude by discussing her current work in smart cameras and robots in healthcare as well as household robots as examples of AI’s potential to augment human capabilities.

BIO Dr. Fei-Fei Li is the inaugural Sequoia Professor in the Computer Science Department at Stanford University and Co-Director of Stanford’s Human-Centered AI Institute. She served as the Director of Stanford’s AI Lab from 2013 to 2018. From January 2017 to September 2018, she was Vice President at Google and served as Chief Scientist of AI/ML at Google Cloud. Dr. Li obtained her B.A. degree in physics from Princeton in 1999 with High Honors and her Ph.D. degree in electrical engineering from the California Institute of Technology (Caltech) in 2005.

Dr. Li’s current research interests include cognitively inspired AI, machine learning, deep learning, computer vision, and AI+healthcare, especially ambient intelligent systems for healthcare delivery. She has also worked on cognitive and computational neuroscience. Dr. Li has published over 200 scientific articles in top-tier journals and conferences and is the inventor of ImageNet and the ImageNet Challenge, a critical large-scale dataset and benchmarking effort that has contributed to the latest developments in deep learning and AI. A leading national voice for advocating diversity in STEM and AI, she is co-founder and chairperson of the national non-profit AI4ALL, aimed at increasing inclusion and diversity in AI education. Dr. Li is an elected Member of the National Academy of Engineering (NAE), the National Academy of Medicine (NAM), and the American Academy of Arts and Sciences (AAAS).

Tuesday, September 26, 2023

Stefan Wager, Stanford University

Treatment Effects in Market Equilibrium

Co-sponsored with the ORIE Colloquium

ABSTRACT When randomized trials are run in a marketplace equilibrated by prices, interference arises. To analyze this, we build a stochastic model of treatment effects in equilibrium. We characterize the average direct (ADE) and indirect treatment effect (AIE) asymptotically. A standard RCT can consistently estimate the ADE, but confidence intervals and AIE estimation require price elasticity estimates, which we provide using a novel experimental design. We define heterogeneous treatment effects and derive an optimal targeting rule that meets an equilibrium stability condition. We illustrate our results using a freelance labor market simulation and data from a cash transfer experiment.

BIO Stefan is an associate professor of Operations, Information, and Technology at the Stanford Graduate School of Business, and an associate professor of Statistics (by courtesy). His research lies at the intersection of causal inference, optimization, and statistical learning. He is particularly interested in developing new solutions to problems in statistics, economics and decision making that leverage recent advances in machine learning.

UNIVERSITY LECTURE

Wednesday, August 30

Andrew Piper, McGill University

Computational Narrative Understanding and the Human Desire to Make-Believe

This is a University Lecture co-sponsored with the IS Colloquium and the Center for Data Science

BIO Andrew Piper is Professor and William Dawson Scholar in the Department of Languages, Literatures, and Cultures at McGill University. He directs the Bachelor of Arts and Science program at McGill and is editor of the Journal of Cultural Analytics. His work focuses on using the tools of data science, machine learning, and natural language processing to study human storytelling. He is the director of .txtlab, a laboratory for cultural analytics, and the author, most recently, of Enumerations: Data and Literary Study (Chicago 2018) and Can We Be Wrong? The Problem of Textual Evidence in a Time of Data (Cambridge 2020).

ABSTRACT Narratives play an essential role in shaping human beliefs, fostering social change, and providing a sense of personal meaning, purpose and joy. Humans are in many ways primed for narrative. In this talk, I will share new work from my lab that leverages emerging techniques in computational narrative understanding to study human storytelling at large scale. What are the cues that signal to readers or listeners that narrative communication is happening? How do imaginary stories differ from true ones and what can this tell us about the value of fictional storytelling for everyday life? How might we imagine large-scale narrative observatories to measure public and political health and well-being? As we face growing skepticism around the purpose of humanistic study, this talk will argue that data-driven and fundamentally interdisciplinary approaches to the study of storytelling can help restore public confidence in the humanities and initiate new pathways for research that address pressing public needs.

Spring 2023: Data Science Distinguished Lecture Series

Friday, March 10

Kristian Lum

University of Chicago Data Science Institute

Defining, Measuring, and Reducing Algorithmic Amplification

Co-sponsored with the AI Seminar and IS Colloquium

BIO Kristian Lum is an Associate Research Professor at the University of Chicago Data Science Institute. Previously, she was a Sr. Staff Machine Learning Researcher at Twitter, where she led research as part of the Machine Learning Ethics, Transparency, and Accountability (META) team. She is a founding member of the ACM Conference on Fairness, Accountability, and Transparency and has served in various leadership roles since its inception, growing this community of scholars and practitioners who care about the responsible use of machine learning systems. She is a recent recipient of the COPSS Emerging Leaders Award and an NSF Kavli Fellow. Her research looks into the (un)fairness of predictive models, with particular attention to those used in a criminal justice setting.

ABSTRACT As people consume more content delivered by recommender systems, it has become increasingly important to understand how content is amplified by these recommendations. Much of the dialogue around algorithmic amplification implies that the algorithm is a single machine learning model acting on a neutrally defined, immutable corpus of content to be recommended. However, there are several other components of the system that are not traditionally considered part of the algorithm that influence what ends up on a user’s content feed. In this talk, I will enumerate some of these components that influence algorithmic amplification and discuss how these components can contribute to amplification and simultaneously confound its measurement. I will then discuss several proposals for mitigating unwanted amplification, even when it is difficult to measure precisely.

Wednesday, May 10, 3:30 – 4:30 p.m., Gates 114

Jessica Hullman

Northwestern University

Toward Robust Data Visualization for Inference

Co-sponsored with the IS Colloquium

BIO Dr. Jessica Hullman is the Ginni Rometty Associate Professor of Computer Science at Northwestern University. Her research addresses challenges that arise when people draw inductive inferences from data interfaces. Hullman’s work has contributed visualization techniques, applications, and evaluative frameworks for improving data-driven inference in applications like visual data analysis, data communication, privacy budget setting, and responsive design. Her current interests include theoretical frameworks for formalizing and evaluating the value of a better interface and elicitation of domain knowledge for data analysis. Hullman’s work has received best paper awards at top visualization and HCI venues. She is the recipient of a Microsoft Faculty Fellowship (2019) and NSF CAREER, Medium, and Small awards as PI, among others.

ABSTRACT Research and development in computer science and statistics have produced increasingly sophisticated software interfaces for interactive visual data analysis, and data visualizations have become ubiquitous in communicative contexts like news and scientific publishing. However, despite these successes, our understanding of how to design robust visualizations for data-driven inference remains limited. For example, designing visualizations to maximize perceptual accuracy and users’ reported satisfaction can lead people to adopt visualizations that promote overconfident interpretations. Design philosophies that emphasize data exploration and hypothesis generation over other phases of analysis can encourage pattern-finding over sensitivity analysis and quantification of uncertainty. I will motivate alternative objectives for measuring the value of a visualization, and describe design approaches that better satisfy these objectives. I will discuss how the concept of a model check can help bridge traditionally exploratory and confirmatory activities, and suggest new directions for software and empirical research.

Fall 2022: Data Science Distinguished Lecture Series

November 15, 2022, 4:15 p.m.: Center for Data Science for Enterprise & Society, Data Science Distinguished Lecture Series

Nonlinear Optimization in the Presence of Noise

This talk is co-sponsored with the School of Operations Research and Information Engineering

Speaker: Jorge Nocedal

Date: Tuesday, November 15, 2022

Time/Location:

4:15 p.m., Rhodes Hall, Room 253

Reception 3:45 p.m., Rhodes 258

Abstract: We begin by presenting three case studies that illustrate the nature of noisy optimization problems arising in practice. They originate in atmospheric sciences, machine learning, and engineering design. We wish to understand the source of the noise (e.g. a lower fidelity model, sampling or reduced precision arithmetic), its properties, and how to estimate it. This sets the stage for the presentation of our goal of redesigning constrained and unconstrained nonlinear optimization methods to achieve noise tolerance.

Bio: Jorge Nocedal is the Walter P. Murphy Professor in the Department of Industrial Engineering and Management Sciences at Northwestern University. He studied at UNAM (Mexico) and Rice University. His research is in optimization, both deterministic and stochastic, with emphasis on very large-scale problems. He is a SIAM Fellow and was awarded the 2012 George B. Dantzig Prize and the 2017 Von Neumann Theory Prize for contributions to the theory and algorithms of nonlinear optimization. He is a member of the US National Academy of Engineering.

October 24, 2022: Center for Data Science for Enterprise & Society, Data Science Distinguished Lecture Series

Information Provision in Markets

This talk is co-sponsored with Computer Science, Information Science, and ORIE

Time: 3:45 – 4:45 p.m.

Location: 114 Gates Hall

Reception prior at 3:15 in 122 Gates Hall

Abstract: Tech-mediated markets give individuals an unprecedented number of opportunities. Students no longer need to attend their neighborhood school; through school choice programs they can apply to any school in their city. Tourists no longer need to wander streets looking for restaurants; they can select among them on an app from the comfort of their hotel rooms. Theoretically, this increased access improves outcomes. However, realizing these potential gains requires individuals to be able to navigate their options and make informed decisions. In this talk, we explore how markets can help guide individuals through this process by providing relevant information. We first discuss a school choice market where students must exert costly effort to learn their preferences. We show that posting exam score cutoffs breaks information deadlocks allowing students to efficiently evaluate their options. We next study a recommendation app which can selectively reveal past reviews to users. We show that it’s possible to facilitate learning across users by creating a hierarchical network structure in which early users explore and late users exploit the results of this exploration.

Bio: Nicole Immorlica received her PhD in 2005 from MIT, joined Northwestern University as a professor in 2008, and joined Microsoft Research New England (MSR NE) in 2012, where she currently leads the economics and computation group. She is the recipient of a number of fellowships and awards, including the Sloan Fellowship, the Microsoft Faculty Fellowship, and the NSF CAREER Award. She has been on several boards, including SIGecom, SIGACT, the Game Theory Society, and OneChronos; is an associate editor of Operations Research, Games and Economic Behavior, and Transactions on Economics and Computation; and was a program committee member and chair for several ACM, IEEE, and INFORMS conferences in her area. In her research, Nicole uses tools and modeling concepts from computer science and economics to explain, predict, and shape behavioral patterns in various online and offline markets. She is known for her work on mechanism design, market design, and social networks.

Spring 2022: Data Science Distinguished Lecture Series

Sponsored by the Center for Data Science for Enterprise & Society

School Choice in Chile

Speaker: José Correa

This talk is co-sponsored with the School of Operations Research and Information Engineering (ORIE)

Date: Monday, April 25th

Reception: 9:30 a.m., Rhodes 258

Talk: 10 – 11 a.m., Rhodes 253

Watch the video of José’s talk

Policy Gradient Descent for Control: Global Optimality and Convex Parameterization

Speaker: Maryam Fazel, University of Washington

This talk is co-sponsored with the Center for Applied Mathematics (CAM)

Date: Friday, May 6

3:45 p.m., Rhodes 253

Watch the video of Maryam’s talk